How do 87m records scraped from Facebook become an advertising campaign that could help swing an election? What does gathering that much data actually involve? And what does that data tell us about ourselves?

The Cambridge Analytica scandal has raised question after question, but for many, the technological USP of the company, which announced last week that it was closing its operations, remains a mystery.

For those 87 million people probably wondering what was actually done with their data, I went back to Christopher Wylie, the ex-Cambridge Analytica employee who blew the whistle on the company’s problematic operations in the Observer. According to Wylie, all you need to know is a little bit about data science, a little bit about bored rich women, and a little bit about human psychology...

Step one, he says, over the phone as he scrambles to catch a train: “When you’re building an algorithm, you first need to create a training set.” That is: no matter what you want to use fancy data science to discover, you first need to gather data the old-fashioned way. Before you can use Facebook likes to predict a person’s psychological profile, you need to get a few hundred thousand people to do a 120-question personality quiz.

The “training set” refers, then, to that data in its entirety: the Facebook likes, the personality tests, and everything else you want to learn from. Most important, it needs to contain your “feature set”: “The underlying data that you want to make predictions on,” Wylie says. “In this case, it’s Facebook data, but it could be, for example, text, like natural language, or it could be clickstream data” – the complete record of your browsing activity on the web. “Those are all the features that you want to [use to] predict.”

At the other end, you need your “target variables” – in Wylie’s words, “the things that you’re trying to predict for. So in this case, personality traits or political orientation, or what have you.”

If you’re trying to use one thing to predict another, it helps if you can look at both at the same time. “If you want to know the relationships between Facebook likes in your feature set and personality traits as your target variables, you need to see both,” says Wylie.
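
To make the pairing concrete, here is a minimal Python sketch of assembling a training set, assuming likes and survey scores arrive as two dictionaries keyed by a user ID. The names `TrainingRow` and `build_training_set`, and all of the data, are hypothetical; the point is only that a person counts towards training when both their feature set and their target variables are present.

```python
from dataclasses import dataclass


@dataclass
class TrainingRow:
    user_id: str
    likes: set[str]           # feature set: pages this person liked (hypothetical)
    traits: dict[str, float]  # target variables: survey-derived scores (hypothetical)


def build_training_set(likes_by_user, survey_scores_by_user):
    """Keep only people for whom we have BOTH features and targets."""
    rows = []
    for user_id, traits in survey_scores_by_user.items():
        likes = likes_by_user.get(user_id)
        if likes is None:
            continue  # a survey with no likes cannot link features to targets
        rows.append(TrainingRow(user_id, set(likes), dict(traits)))
    return rows


likes_by_user = {
    "u1": {"page_a", "page_b"},
    "u2": {"page_c"},
    "u3": {"page_a"},  # no survey: usable later for prediction, not for training
}
survey_scores_by_user = {
    "u1": {"openness": 3.8, "neuroticism": 2.1},
    "u2": {"openness": 2.5, "neuroticism": 3.9},
    "u4": {"openness": 4.1, "neuroticism": 1.7},  # survey but no likes collected
}

training_set = build_training_set(likes_by_user, survey_scores_by_user)
print([row.user_id for row in training_set])  # ['u1', 'u2']
```

The user with likes but no survey (`u3`) is exactly the kind of record a finished model is later meant to fill in.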

Facebook data, which lies at the heart of the Cambridge Analytica story, is a fairly plentiful resource in the data science world – and certainly was back in 2014, when Wylie first started working in this area. Personality traits are much harder to get hold of: despite what the proliferation of BuzzFeed quizzes might suggest, it takes quite a lot to persuade someone to fill in a 120-question survey (the length of the short version of one of the standard psychological surveys, the Ipip-Neo).

“Quite a lot” is relative, however. “For some people, the incentive to take a survey is financial. If you’re a student or looking for work or just want to make $5, that’s an incentive.” The actual money handed over, Wylie says, “ranged from $2 to $4”. The higher payments go to “groups that were harder to get”. The group least likely to take a survey, and so earning the most from it, were African American men. “Other people take surveys just because they find it interesting, or they are bored. So we over-sampled wealthy white women. Because if you live in the Hamptons and have nothing to do in the afternoon, you fill out consumer research surveys.”

The personality surveys use those 120 questions to profile people along five discrete axes – the “five factors” model, popularly called the “Ocean” model after one common breakdown of the factors: openness to experience, conscientiousness, extraversion, agreeableness and neuroticism.
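
Turning questionnaire answers into five scores is largely bookkeeping. The sketch below assumes a 1-to-5 agreement scale and a scoring key that maps each item to one factor, with some items reverse-keyed so that strong agreement counts as a low score. The two-items-per-factor key is invented for illustration; the 120-item instrument assigns 24 items to each factor.

```python
# Hypothetical scoring key: item number -> (factor, reverse_keyed).
SCORING_KEY = {
    1: ("openness", False),
    2: ("openness", True),
    3: ("conscientiousness", False),
    4: ("conscientiousness", True),
    5: ("extraversion", False),
    6: ("extraversion", True),
    7: ("agreeableness", False),
    8: ("agreeableness", True),
    9: ("neuroticism", False),
    10: ("neuroticism", True),
}


def score_ocean(responses, key=SCORING_KEY, scale_max=5):
    """Average 1..scale_max answers into one score per factor, flipping reverse-keyed items."""
    totals, counts = {}, {}
    for item, answer in responses.items():
        factor, reverse = key[item]
        value = (scale_max + 1 - answer) if reverse else answer
        totals[factor] = totals.get(factor, 0) + value
        counts[factor] = counts.get(factor, 0) + 1
    return {factor: totals[factor] / counts[factor] for factor in totals}


answers = {1: 5, 2: 1, 3: 4, 4: 2, 5: 2, 6: 4, 7: 3, 8: 3, 9: 1, 10: 5}
scores = score_ocean(answers)
print(scores)
```

Here every reverse-keyed answer agrees with its partner item, so each factor averages to a whole number (openness 5.0, neuroticism 1.0, and so on).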

That model clusters personality traits into distinctions that seem to hold across cultures and across time. So, for instance, those who describe themselves as “loud” are likely to also describe themselves as “gregarious”. If they agree with that description this year, they’re likely to agree with it next year. That cluster is likely to show up in responses in every language. And if a person responds to it negatively, there are likely to be real, noticeable differences between them and people who answer it positively.

Those features of the model are what make it actually useful for profiling individuals, says Wylie – in contrast to some other popular psychological profiles such as the Myers-Briggs system.

In the testing phase of the research, Facebook was barely involved. The surveys were offered on commercial data research sites – first Amazon’s Mechanical Turk platform, then a specialist operator called Qualtrics. (The switch was made, Wylie says, because Amazon has the issue that “people are overfamiliar with filling out surveys” – so much so that it starts to affect your results.)

It was only at the very end that Facebook came into play. In order to be paid for their survey, users were required to log in to the site, and approve access to the survey app developed by Dr Aleksandr Kogan, the Cambridge University academic whose research into personality profiling using Facebook likes provided the perfect access for the Robert Mercer-funded Cambridge Analytica to quickly get in on the field. (Kogan maintains that Cambridge Analytica assured him they were using data appropriately and says he has been “used as a scapegoat by both Facebook and Cambridge Analytica”.)

To a survey user, the process was quick: “You click the app, you go on, and then it gives you the payment code.” But two very important things happened in those few seconds. First, the app harvested as much data as it could about the user who just logged on. Where the psychological profile is the target variable, the Facebook data is the “feature set”: the information a data scientist has on everyone else, which they need to use in order to accurately predict the traits they really want to know.

It also provided personally identifiable information such as real name, location and contact details – something that wasn’t discoverable through the survey sites themselves. “That meant you could take the inventory and relate it to a natural person [who is] matchable to the electoral register.”
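
Wylie does not say how the matching was done. As an illustration only, the sketch below assumes the simplest possible approach, an exact join on a normalised name plus ZIP code; real record linkage typically weighs several fuzzier fields. The function names, field names and data are all hypothetical.

```python
def normalise(name, zip_code):
    """Crude match key: lower-cased, whitespace-collapsed name plus ZIP code."""
    return (" ".join(name.lower().split()), zip_code.strip())


def link_to_register(app_profiles, register):
    """Map app user IDs to voter IDs wherever the match key is identical."""
    index = {normalise(r["name"], r["zip"]): r["voter_id"] for r in register}
    linked = {}
    for profile in app_profiles:
        voter_id = index.get(normalise(profile["name"], profile["zip"]))
        if voter_id is not None:
            linked[profile["user_id"]] = voter_id
    return linked


register = [
    {"name": "Jane Doe", "zip": "10001", "voter_id": "V1"},
    {"name": "John Roe", "zip": "60601", "voter_id": "V2"},
]
app_profiles = [
    {"user_id": "u1", "name": "jane  doe", "zip": "10001"},
    {"user_id": "u2", "name": "John Roe", "zip": "94105"},  # same name, different ZIP
]

linked = link_to_register(app_profiles, register)
print(linked)  # {'u1': 'V1'}
```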

Second, the app did the same thing for all the friends of the user who installed it. Suddenly the hundreds of thousands of people who you’ve paid a couple of dollars to fill out a survey, whose personalities are a mystery, become millions of people whose Facebook profiles are an open book.
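
The multiplication step is simple set arithmetic. Assuming a hypothetical `friends_of` lookup from each installer to their friends, the reachable population is the installers plus the union of their friend lists, with shared friends counted only once.

```python
def expand_via_friends(installers, friends_of):
    """Everyone reachable: the installers plus each installer's direct friends."""
    reached = set(installers)
    for user in installers:
        reached.update(friends_of.get(user, ()))
    return reached


# Hypothetical toy network: "y" is a friend of both installers.
friends_of = {
    "a": {"x", "y", "z"},
    "b": {"y", "w"},
}

reached = expand_via_friends(["a", "b"], friends_of)
print(len(reached))  # 6
```

Two paid installers expose six profiles here; at a few hundred friends per installer, the same union is how a few hundred thousand surveys become millions of records.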

That’s where the final transformation comes in. How do you turn a few hundred thousand personality profiles into a few million? With a lot of computing power, and a massive matrix of possibilities. “Even though your sample size is 300,000 people, give or take, your feature set is like 100m across,” says Wylie. Every single Facebook “like” found in the data set becomes its own column in this enormous matrix. “Even if there is only one instance in the entire set, it’s still a feature.”
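
With 300,000 rows and roughly 100m columns, a fully written-out grid would have about 30 trillion cells, nearly all of them empty. One standard way to cope, shown here as a sketch rather than as what Cambridge Analytica actually used, is a sparse layout that gives each distinct like a column number and stores, per person, only the columns that are set. The data and function name are hypothetical.

```python
def build_sparse_matrix(likes_by_user):
    """Give every distinct like its own column; store only the cells that are 1."""
    column_of = {}  # like -> column index, grows as new likes appear
    rows = {}       # user -> sorted column indices set to 1 for that user
    for user, likes in likes_by_user.items():
        cols = []
        for like in sorted(likes):
            if like not in column_of:
                column_of[like] = len(column_of)
            cols.append(column_of[like])
        rows[user] = sorted(cols)
    return column_of, rows


likes_by_user = {
    "u1": {"jazz", "football"},
    "u2": {"jazz"},
    "u3": {"obscure_band"},  # a like seen only once still gets its own column
}

column_of, rows = build_sparse_matrix(likes_by_user)
n_cells = len(rows) * len(column_of)      # size of the full grid
n_ones = sum(len(c) for c in rows.values())  # cells actually stored
print(len(column_of), n_ones, n_cells)  # 3 4 9
```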

“All that data was then put into an ensemble model,” Wylie says. “This is when you use different families or approaches of machine learning, because each of them will have their own strengths and weaknesses... and then they sort of vote, and then you amalgamate the results and come up with a conclusion.” This is where data science becomes more of a data art: the exact contribution of each approach to the overall model isn’t set in stone, and there’s no right way to do it. In the academic world, it’s sometimes called “training by grad student” – the point where the only thing to do is move forward through laborious trial and error. Still, it worked well enough, and in the end, Wylie says, “we built 253 algorithms, which meant there were 253 predictions per profiled record”. The goal was achieved: a model that could effectively take the Facebook likes of its subjects and work backwards, filling in the rest of the columns in the spreadsheet to arrive at guesses as to their personalities, political affiliations and more.
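
Wylie describes the ensemble only loosely. One common way to make models “vote” is a weighted average of their scores, known as soft voting; the sketch below assumes that scheme, with three invented toy models standing in for real model families and an adjustable `weights` list standing in for the trial-and-error tuning he describes. It produces one prediction for one hypothetical target, where the real system reportedly produced 253.

```python
# Three deliberately different toy "model families"; each maps a set of likes
# to a score between 0 and 1 for one hypothetical target (high openness).
def keyword_model(likes):
    return 1.0 if "poetry" in likes else 0.0  # a single telling like


def count_model(likes):
    return min(len(likes) / 10, 1.0)  # more likes, higher score


def prior_model(likes):
    return 0.5  # ignores the data entirely: a base-rate guess


MODELS = [keyword_model, count_model, prior_model]


def ensemble_predict(likes, models=MODELS, weights=None, threshold=0.5):
    """Weighted soft vote: average the models' scores, then apply a cut-off."""
    if weights is None:
        weights = [1.0] * len(models)
    score = sum(w * model(likes) for w, model in zip(weights, models)) / sum(weights)
    return score, score >= threshold


profile = {"user_id": "u1", "likes": {"poetry", "travel"}}
score, is_high = ensemble_predict(profile["likes"])
# Append the prediction to the record, as each of the 253 scores was appended.
profile["predictions"] = {"high_openness": is_high}
print(round(score, 3), profile["predictions"])  # 0.567 {'high_openness': True}
```

Changing `weights` changes how much each model's vote counts, which is the part Wylie says has no single right answer.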

By the end of August 2014, Wylie had the first successful outputs: 2.1m profiled records, from 11 target US states, the plan being that they would be used to communicate and refine messages in Mercer- and Steve Bannon-backed Republican campaigns leading up to the 2016 primaries (Wylie left before these). “What that number represents is people who not only have their Facebook data, voter data, and consumer data (which was all matched up), but also had an additional 253 predictions or scores that were then appended to their profile.”

Those 253 predictions were the “secret sauce” that Cambridge Analytica claimed it could offer its customers. Using Facebook itself, advertisers are limited to broad demographic strokes, and a few narrower algorithmically determined categories – whether you like jazz music, say, or what your favourite sports team is. But with 253 further predictions, Cambridge Analytica could, Wylie says, craft adverts no one else could: a neurotic, extroverted and agreeable Democrat could be targeted with a radically different message than an emotionally stable, introverted, intellectual one, each designed to suppress their voting intention – even if the same messages, swapped around, would have the opposite effect.

Wylie brings up the anodyne political statement that a candidate is in favour of jobs. “Jobs in the economy is a good example because it’s a meaningless message. Everyone’s pro-jobs in the economy. So in that sense, using just the message of ‘I am in favour of jobs in the economy’, or ‘I have a plan to fix jobs in the economy’, you cannot differentiate yourself from your opponent.

“But one of the things that we found was that actually when you unpack what is a job for different people, different people engage with constructs with different motivations and value sets that are interrelated with their dispositions.”

What that means in practice is that the same blandishment can be dressed up in different language for different personalities, creating the impression of a candidate who connects with voters on an emotional level. “If you’re talking to a conscientious person” – one who ranks highly on the C part of the Ocean model – “you talk about the opportunity to succeed and the responsibility that a job gives you. If it’s an open person, you talk about the opportunity to grow as a person. Talk to a neurotic person, and you emphasise the security that it gives to my family.”
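
In code, the targeting rule Wylie describes could be as simple as a lookup. This sketch assumes each person already carries five Ocean scores and picks the framing for their highest-scoring trait that has one; the `JOBS_FRAMING` table paraphrases his examples, and the function and default message are hypothetical.

```python
# Framings paraphrased from Wylie's "jobs" example; the table layout is hypothetical.
JOBS_FRAMING = {
    "conscientiousness": "the opportunity to succeed and the responsibility a job gives you",
    "openness": "the opportunity to grow as a person",
    "neuroticism": "the security a job gives your family",
}


def pick_framing(ocean_scores, framings=JOBS_FRAMING, default="jobs in the economy"):
    """Return the framing for the person's highest-scoring trait that has one."""
    ranked = sorted(ocean_scores, key=ocean_scores.get, reverse=True)
    for trait in ranked:
        if trait in framings:
            return framings[trait]
    return default  # the bland, undifferentiated message


voter = {"openness": 2.0, "conscientiousness": 4.5, "extraversion": 3.0,
         "agreeableness": 3.1, "neuroticism": 2.2}
print(pick_framing(voter))
```

A person whose top trait has no tailored framing falls through to the next trait, and only then to the bland default.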

Thanks to the networked nature of modern campaigning, in theory all these messages can be delivered simultaneously to different groups. Towards the end of the campaign, once the messaging has settled in, they can even be automated, Mad Libs-style, with an algorithm churning through a thesaurus to find the perfect combination of words to win over different subgroups.
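
The Mad Libs approach amounts to filling slots in a template from lists of interchangeable words and keeping whichever version performs best. The template, mini-thesaurus and click-through scoring below are all hypothetical; the article does not say how a campaign would measure which wording “wins over” a subgroup, so click-through rate is an assumed stand-in.

```python
from itertools import product

# Hypothetical template and mini-thesaurus; each {slot} is filled from its word list.
TEMPLATE = "A plan for {adjective} jobs that {verb} your family."
THESAURUS = {
    "adjective": ["secure", "stable", "steady"],
    "verb": ["protect", "support"],
}


def generate_variants(template, thesaurus):
    """Yield every way of filling the template's slots from the thesaurus."""
    slots = list(thesaurus)
    for words in product(*(thesaurus[slot] for slot in slots)):
        yield template.format(**dict(zip(slots, words)))


def best_variant(results):
    """results maps variant -> (clicks, impressions); return the best click-through rate."""
    return max(results, key=lambda v: results[v][0] / results[v][1])


variants = list(generate_variants(TEMPLATE, THESAURUS))
print(len(variants))  # 6
print(variants[0])    # A plan for secure jobs that protect your family.
```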

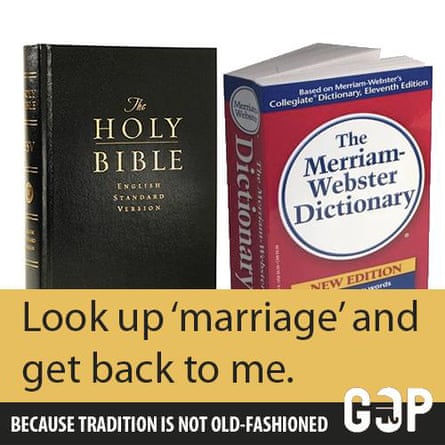

Of course, it isn’t all blandishments. One message used to boost rightwing turnout attacked same-sex marriage. “It’s funny, because this is so offensive and implicitly homophobic, but it’s a team of gays that created it,” Wylie says. “It was targeting conscientious people. It was a picture of a dictionary and it said ‘Look up marriage and get back to me’. For someone who is conscientious, it is a compelling message: a dictionary is a source of order, and a conscientious person is more deferential to structure.”

At a certain point, psychometric targeting moves into the realm of dog-whistle campaigning. Images of walls proved to be really effective in campaigning around immigration, for instance. “Conscientious people like structure, so for them, a solution to immigration should be orderly, and a wall embodied that. You can create messaging that doesn’t make sense to some people but makes so much sense to other people. If you show that image, some people wouldn’t get that that’s about immigration, and others immediately would get that.” The actual issues, for Wylie, are simply the “plain white toast” of politics, waiting for the actual flavour to be loaded on. “No one wants plain white toast.” The job of the data, he says, is to “learn the particular flavour or spice” that will make that toast appealing.

While this was undoubtedly a highly sophisticated targeting machine, questions remain about Cambridge Analytica’s psychometric model – ones Wylie, perhaps, isn’t best placed to answer. When Kogan gave evidence to parliament in April, he suggested that it was barely better than chance at applying the right Ocean scores to individuals. Maybe that edge is enough to matter – or maybe CA was selling snake oil. And even if individuals were correctly labelled with the five factors, is advertising to them based on that really as simple as slightly hokey-sounding appeals to love of order, or fear of the other?
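
Whether a model is “barely better than chance” is a comparison against a baseline. A minimal sketch, assuming a binary high/low label for a single trait and invented toy data (not Kogan’s figures): accuracy is set against the majority-class rate, the score a model would get by always guessing the more common answer.

```python
def accuracy(predicted, actual):
    """Share of people whose predicted label matches their survey label."""
    return sum(p == a for p, a in zip(predicted, actual)) / len(actual)


def majority_baseline(actual):
    """Accuracy of always guessing whichever label is more common."""
    share_high = sum(actual) / len(actual)
    return max(share_high, 1 - share_high)


# Invented labels: True means the person scored "high" on the trait in the survey.
actual = [True, False, True, True, False, False, True, False, True, False]
predicted = [True, False, False, True, False, True, True, False, False, False]

acc = accuracy(predicted, actual)
base = majority_baseline(actual)
print(acc, base, round(acc - base, 2))  # 0.7 0.5 0.2
```

The lift over the baseline, rather than raw accuracy, is what shows whether a model has learned anything at all.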

That said, there’s clearly something in it. Take a look instead at a patent filed in 2012 on “determining user personality characteristics from social networking system communications”. “Stored personality characteristics may be used as targeting criteria for advertisers ... to increase the likelihood that the user … positively interacts with a selected advertisement,” the patent suggests. Its author? Facebook itself.
