On Tuesday, Stability AI launched Stable Diffusion XL Turbo, an AI image-synthesis model that can rapidly generate imagery based on a written prompt. So rapidly, in fact, that the company is billing it as "real-time" image generation, since it can also quickly transform images from a source such as a webcam.

SDXL Turbo's primary innovation lies in its ability to produce image outputs in a single step, a significant reduction from the 20–50 steps required by its predecessor. Stability attributes this leap in efficiency to a technique it calls Adversarial Diffusion Distillation (ADD). ADD uses score distillation, where the model learns from existing image-synthesis models, and adversarial loss, which enhances the model's ability to differentiate between real and generated images, improving the realism of the output.

Stability detailed the model's inner workings in a research paper released Tuesday that focuses on the ADD technique. One of the claimed advantages of SDXL Turbo is its similarity to Generative Adversarial Networks (GANs), especially in producing single-step image outputs.

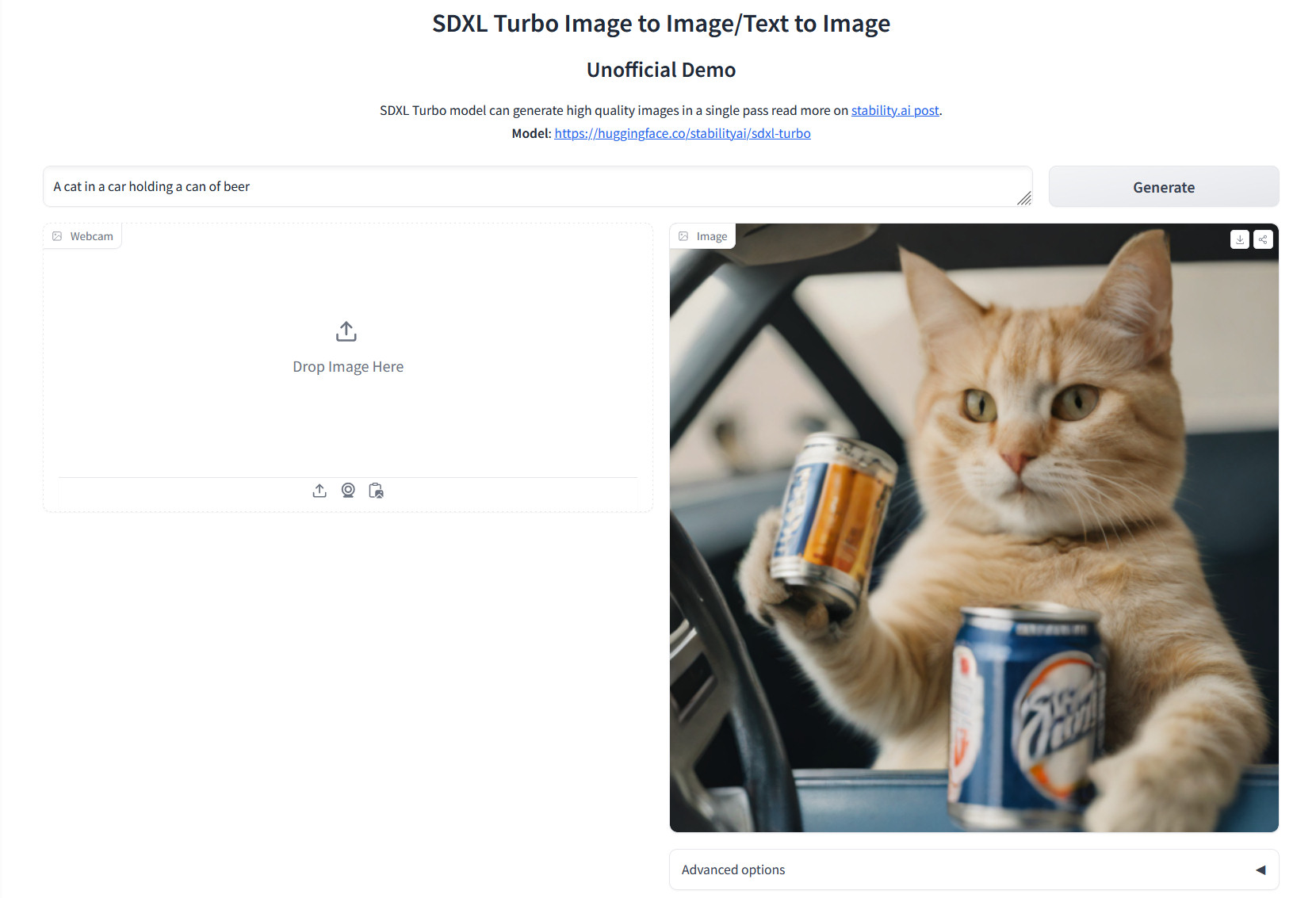

SDXL Turbo images aren't as detailed as SDXL images produced at higher step counts, so it's not considered a replacement for the previous model. But for the speed savings involved, the results are eye-popping.

To try it out, we ran SDXL Turbo locally on an Nvidia RTX 3060 using Automatic1111 (the weights drop in just like SDXL weights), and it can generate a 3-step 1024×1024 image in about 4 seconds, versus 26.4 seconds for a 20-step SDXL image with similar detail. Smaller images generate much faster (under one second for 512×768), and of course, a beefier graphics card such as an RTX 3090 or 4090 will allow much quicker generation times as well. Contrary to Stability's marketing, we've found that SDXL Turbo images have the best detail at around 3–5 steps per image.
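For a rough sense of where that time savings comes from, here is a back-of-the-envelope sketch that uses only the two 1024×1024 timings above. The variable and function names are ours, and the per-step figures are approximate, since each total also includes fixed overhead (such as decoding the final image) that this simple division ignores.

```python
# Timings reported above (RTX 3060, 1024x1024 output).
turbo_seconds, turbo_steps = 4.0, 3    # "about 4 seconds" for a 3-step SDXL Turbo image
sdxl_seconds, sdxl_steps = 26.4, 20    # 20-step SDXL image


def seconds_per_step(total_seconds, steps):
    """Average wall-clock time per step (ignores fixed overhead)."""
    return total_seconds / steps


turbo_per_step = seconds_per_step(turbo_seconds, turbo_steps)
sdxl_per_step = seconds_per_step(sdxl_seconds, sdxl_steps)
speedup = sdxl_seconds / turbo_seconds

print(f"SDXL Turbo: {turbo_per_step:.2f} s/step")
print(f"SDXL:       {sdxl_per_step:.2f} s/step")
print(f"Overall speedup: {speedup:.1f}x")
```

By this estimate, each step costs roughly the same on both models (about 1.3 seconds on this card), which suggests the roughly 6.6x speedup comes almost entirely from needing fewer steps rather than from faster steps.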
